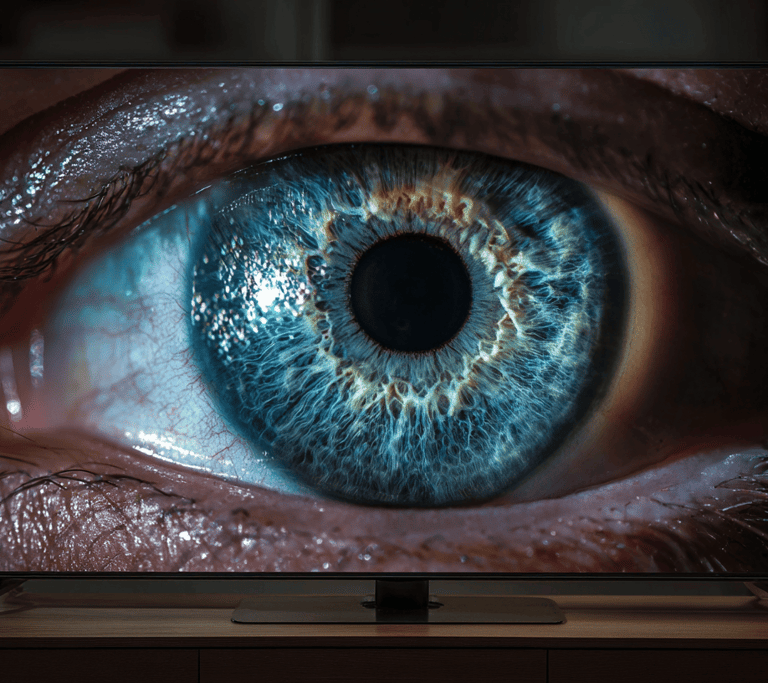

When Algorithms Know You Better Than You Know Yourself

SURVEILLANCE & SOCIETY

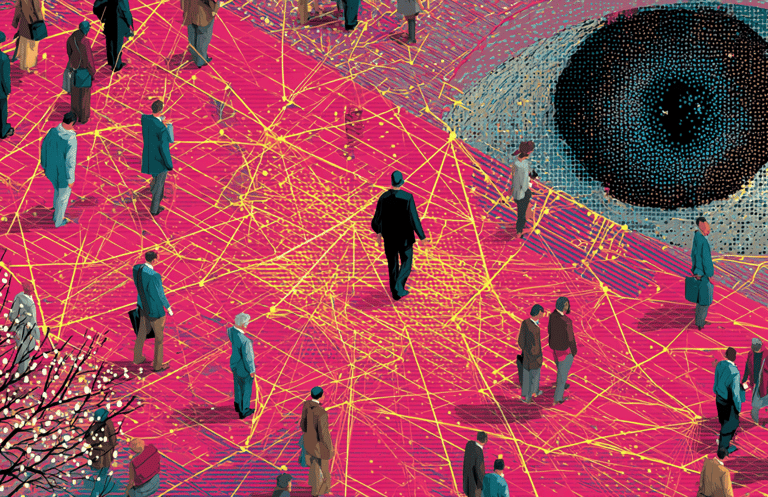

In the aftermath of September 11, 2001, as America grappled with new threats and security challenges, a small startup emerged with CIA seed funding and a bold promise: to make sense of vast oceans of data that human analysts could never navigate alone.

That company was Palantir Technologies, named after the all-seeing stones from Tolkien's The Lord of the Rings, a fitting metaphor for what would become one of the world's most powerful and controversial surveillance platforms.

Today, Palantir operates at the center of what CEO Alex Karp calls an "AI revolution." Its stock rose more than 300% in 2024, making it one of the best-performing members of the S&P 500.

The company's three primary products—Gotham for intelligence and law enforcement, Foundry for corporate analytics, and Apollo for system deployment—have fundamentally altered how institutions observe, analyze, and act upon human behavior.

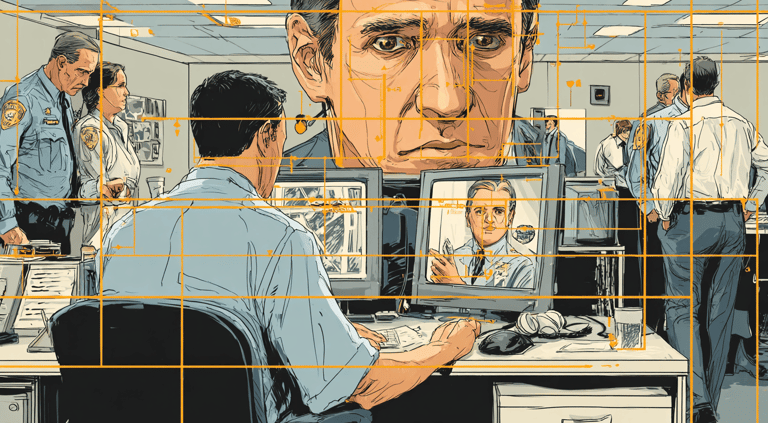

The Company That Sees Everything

Understanding Palantir Technologies and the psychological transformation of surveillance capitalism

But Palantir's recent expansion reveals a darker trajectory. In 2025, the company secured a $30 million contract with Immigration and Customs Enforcement to build ImmigrationOS, a platform designed to provide "near real-time visibility" on deportations, track visa overstays, and identify individuals for removal.

The company has received over $900 million in federal contracts since the current administration took office in January 2025, cementing its role not just as a data analytics provider, but as the technological backbone of state surveillance.

This transformation raises a fundamental question at the heart of cyberpsychology: What does it mean for the human mind to be known, judged, and potentially manipulated by invisible systems operating at unprecedented scale?

The Digital Self - Who You Are in Data

From Person to Profile

Palantir's Artificial Intelligence Platform (AIP), launched in mid-2023, represents a new frontier in how organizations understand human behavior.

The platform doesn't just analyze data—it creates comprehensive digital identities by integrating information from hundreds or thousands of sources simultaneously.

Your morning commute, your purchase history, your social connections, your location patterns, and your online activities merge into a behavioral signature that claims to predict your future actions.

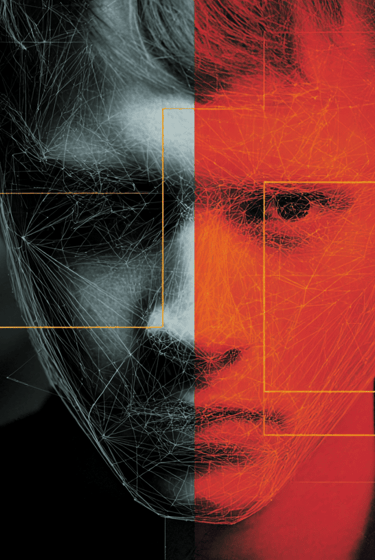

This is what psychologists call the digital self—a data-driven reflection of who we are. But this mirror is fundamentally distorted. Our digital selves are not neutral representations; they are constructed through the specific data points that surveillance systems choose to collect, the algorithms that process this information, and the institutional priorities that shape what gets measured.

The Psychological Toll of Constant Monitoring

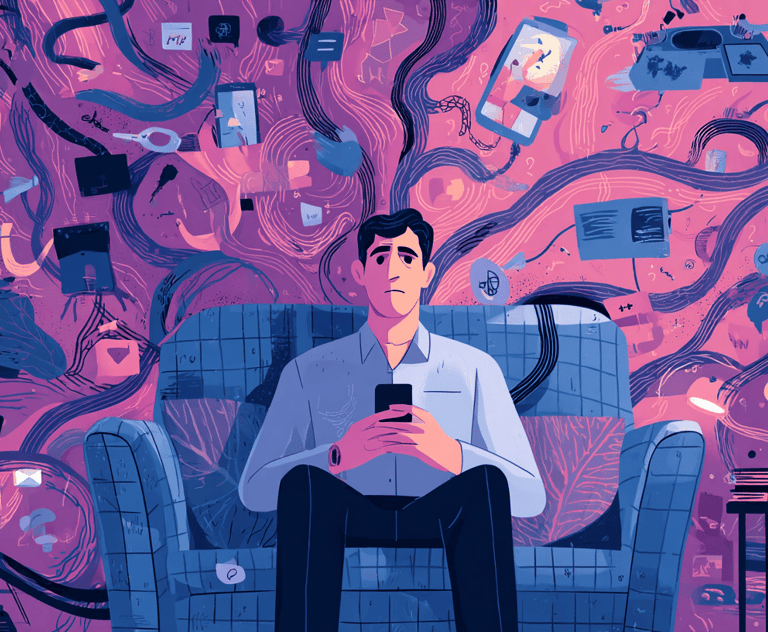

Living under algorithmic observation creates profound psychological adaptations. Research in cyberpsychology identifies several responses to continuous surveillance:

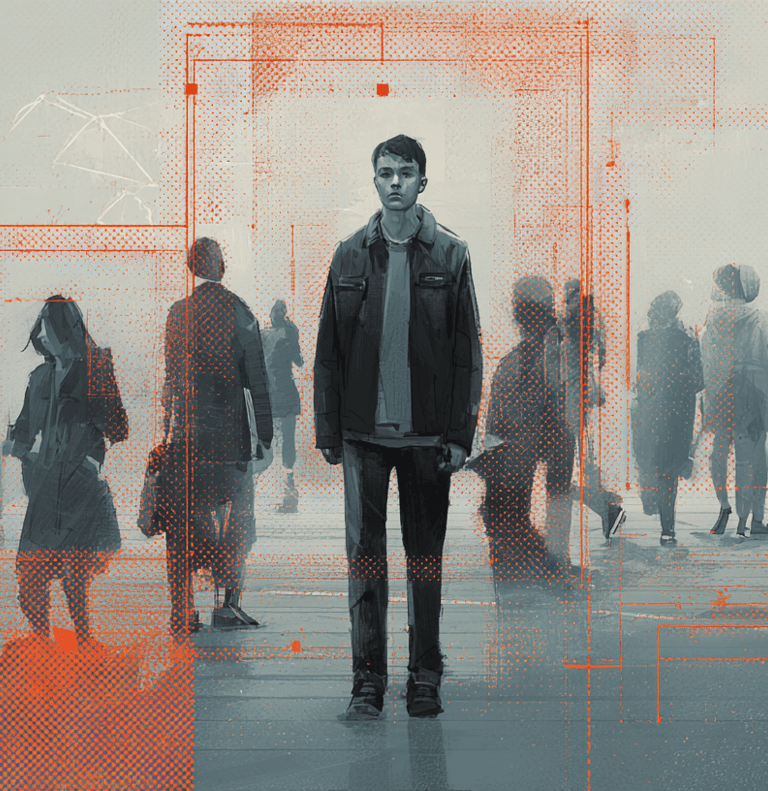

Hypervigilance emerges when individuals become acutely aware of their digital traces, constantly monitoring their own behavior to avoid creating concerning patterns. People begin to question whether their searches, purchases, or travel routes might flag them as suspicious in systems they cannot see or understand.

Identity fragmentation occurs when people internalize the categories that algorithmic systems generate. Instead of experiencing identity as a unified whole, individuals may begin to see themselves through data labels: high-risk, low-credit, frequent-flyer, suspicious-pattern. These external classifications become internalized aspects of self-concept.

Surveillance resignation represents perhaps the most common response—a state where people accept monitoring as inevitable rather than actively managing their digital exposure. This isn't simply laziness; it's a rational psychological adaptation to an impossible situation where every service, every app, and every interaction collects data.

Predictive Power and Pre-Crime Psychology

How Algorithmic Judgment Shapes Reality

Palantir's ImmigrationOS system exemplifies the psychological stakes of predictive technology. The platform aggregates data from passport records, Social Security files, IRS tax information, and license plate readers to identify individuals for deportation.

This isn't reactive law enforcement responding to specific violations; it's proactive targeting based on patterns and predictions.

The psychological concept of algorithmic determinism describes what happens when systems treat statistical predictions as inevitable outcomes.

When Palantir's tools flag someone as "high-risk" or identify them as likely to overstay a visa, that classification shapes how institutions interact with that person, often creating the very outcomes the algorithm predicted.

The Self-Fulfilling Prophecy of Surveillance

Research in social psychology demonstrates that labels powerfully shape behavior. When law enforcement systems flag someone as high-risk, this classification can become self-fulfilling.

Increased attention leads to more interactions with authorities, creating additional data points that reinforce the original assessment. The person hasn't changed—but the surveillance has created a new reality around them.
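The feedback loop described above can be made concrete with a toy model. The sketch below is purely illustrative: the function name, the scoring rule, and every number in it are assumptions chosen for clarity, not a description of how any real enforcement system computes risk. Two people behave identically; the only difference is the score each one starts with.

```python
def simulate_scrutiny(initial_score, rounds=5, base_checks=10,
                      incident_rate=0.1, weight=0.1):
    """Toy model of a risk score that feeds on its own output.

    All parameters are hypothetical. Behavior (incident_rate) is held
    constant, so any change in score comes from scrutiny alone.
    """
    score = initial_score
    history = [score]
    for _ in range(rounds):
        checks = base_checks * (1 + score)   # higher score -> more checks
        logged = checks * incident_rate      # same behavior, more records
        score += weight * logged             # more records -> higher score
        history.append(score)
    return history


unflagged = simulate_scrutiny(initial_score=0.0)
flagged = simulate_scrutiny(initial_score=1.0)

# Gap between two identically behaving people, round by round.
gaps = [f - u for f, u in zip(flagged, unflagged)]
print([round(g, 3) for g in gaps])
```

With these assumed parameters the gap between the two scores grows by 10 percent every round, even though neither person's behavior ever changes. The widening comes entirely from the extra scrutiny that the initial flag attracts.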

For immigrant communities targeted by ImmigrationOS, this creates chronic psychological stress. The knowledge that digital activities could lead to deportation forces families to limit technology use, avoid certain locations, and constantly monitor their behavior for potentially dangerous patterns.

Children in mixed-status families grow up never knowing whether their parents' data trail will trigger an enforcement action.

Living Under Probabilistic Judgment

Perhaps most troubling is what we might call temporal guilt: the psychological burden of being held responsible not for actions taken, but for actions you're statistically predicted to take.

Traditional justice systems operate on the principle that people are responsible for their actual behavior. Predictive systems suggest that people can be targeted for actions they haven't committed but are likely to commit.

This fundamentally undermines human agency, the capacity to make choices and change one's circumstances. When algorithms claim to know your future with statistical certainty, they create a psychological prison where the future feels predetermined.

The Illusion of Consent and Control

How "Agreement" Became Meaningless

Palantir's systems pull data from across government databases, including sources where individuals never explicitly consented to surveillance for immigration enforcement purposes.

But even in commercial contexts where consent forms exist, the reality of digital agreement has become psychologically compromised.

The typical user experience exploits present bias—the psychological tendency to prioritize immediate rewards over long-term consequences.

When someone wants to use a new app or access information quickly, they're likely to accept data collection terms without fully considering the implications. The consent is technically valid but psychologically manipulated.

The Granularity Problem

The most significant privacy invasions don't come from any single data point, but from aggregation. Your coffee purchase, your commute route, your news reading habits, and your social media activity create a behavioral signature that reveals intimate details about your health, relationships, political views, and financial situation.

No single data point requires explicit consent for this level of analysis, yet the combined picture may be more revealing than anything you would consciously choose to share.

This creates consent fragmentation—a situation where users cannot meaningfully evaluate the implications of their choices because the most significant privacy invasions emerge from data combinations rather than individual disclosures.
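A small worked example shows why aggregation matters more than any single disclosure. The sketch below uses an invented six-person population and a hypothetical helper, `count_matches`; none of it reflects a real dataset or any vendor's method. It counts how many people remain indistinguishable from a target as an observer learns more attributes.

```python
# Hypothetical toy population: (coffee shop, commute line, news topic).
PEOPLE = [
    ("cafe_a", "line_1", "sports"),
    ("cafe_a", "line_1", "politics"),
    ("cafe_a", "line_2", "sports"),
    ("cafe_b", "line_1", "sports"),
    ("cafe_b", "line_2", "politics"),
    ("cafe_b", "line_2", "sports"),
]


def count_matches(population, known):
    """Count records consistent with the known attributes.

    `known` maps a column index to the value an observer has learned.
    """
    return sum(
        all(record[col] == value for col, value in known.items())
        for record in population
    )


# Each attribute alone leaves the target hidden among several people.
coffee_only = count_matches(PEOPLE, {0: "cafe_a"})
commute_only = count_matches(PEOPLE, {1: "line_1"})
news_only = count_matches(PEOPLE, {2: "politics"})

# Combined, the same three attributes single out one person.
combined = count_matches(PEOPLE, {0: "cafe_a", 1: "line_1", 2: "politics"})

print(coffee_only, commute_only, news_only, combined)
```

In this toy population each attribute on its own matches two or three people, but the combination matches exactly one. No individual disclosure identified the target; the aggregation did.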

Privacy Fatigue as Learned Helplessness

When every app, website, and service collects data, when privacy policies are incomprehensible, and when opting out means losing access to essential services, maintaining privacy requires constant vigilance and expertise that most people cannot reasonably provide.

The result is privacy fatigue—a psychological state resembling learned helplessness where people stop attempting to control their circumstances because past efforts have felt ineffective.

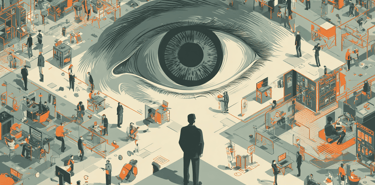

When Power Becomes Incomprehensible

Palantir's business model depends on opacity. The company's contracts with government agencies are often classified, its algorithms are proprietary, and its decision-making processes are protected by trade secrets and national security claims. This creates what psychologists call unpredictability stress—anxiety that emerges when we cannot form mental models of the systems that govern our environment.

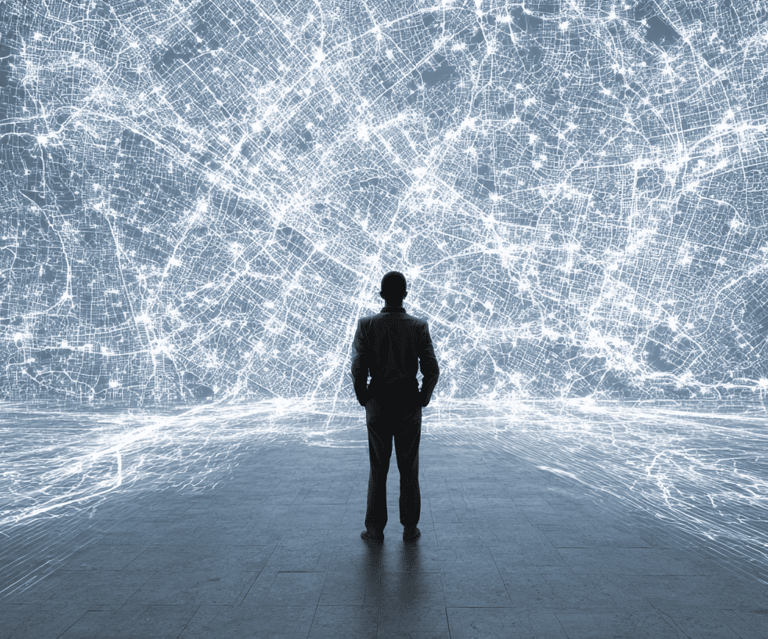

Humans have evolved sophisticated psychological mechanisms for dealing with visible threats and understanding social hierarchies. We can navigate complex social dynamics because these systems operate within our perceptual and cognitive capabilities.

Algorithmic systems represent a fundamentally different form of power—invisible, incomprehensible, and operating at scales that dwarf human cognition.

The Black Box Problem and Invisible Power

The Erosion of Trust

Trust represents one of the most fundamental human psychological needs. From early childhood, humans develop trust through predictable relationships where causes and effects can be understood, where feedback is available, and where repair is possible when things go wrong. These basic psychological requirements for trust are systematically violated by opaque algorithmic systems.

When Palantir's tools influence immigration decisions, healthcare resource allocation, or criminal justice outcomes, the people affected have no way to understand why certain decisions were made, no mechanism for providing feedback, and no process for appeal or correction. This violates basic human needs for predictability and fairness.

Democratic Helplessness

Democracy depends not just on formal voting mechanisms, but on psychological conditions that allow citizens to feel capable of meaningful participation in social decision-making.

When crucial decisions about law enforcement, healthcare, and social services are made by opaque algorithms, citizens lose the ability to understand, evaluate, or influence these systems.

This creates democratic helplessness—the feeling that civic participation is meaningless because the most important decisions are made by systems beyond public control. The result may be political disengagement or authoritarian appeals that promise to restore human agency to social governance.

The AI Revolution and Human Consciousness

Palantir's CEO describes the company as being in "the earliest stages, the beginning of the first act, of a revolution that will play out over years and decades."

The company's Artificial Intelligence Platform has driven explosive growth, with U.S. commercial revenue increasing by over 90% year-over-year in recent quarters. This isn't just corporate expansion—it represents the normalization of algorithmic judgment across society.

Palantir's Vision for Tomorrow's Human Psychology

Hyper-Personalized Governance

As data collection and analysis capabilities advance, we may be approaching a future where governance becomes hyper-personalized—where laws, regulations, and social services are tailored to individual risk profiles and behavioral predictions.

Instead of shared rights and responsibilities that apply equally to all citizens, people would exist in personalized regulatory environments designed around their specific data patterns.

The psychological implications are profound. Human identity has historically been shaped by membership in communities with shared norms, values, and expectations. Hyper-personalized governance could create a society of isolated individuals, each living under different rules and expectations, making collective identity and social solidarity increasingly difficult to maintain.

The Question of Human Agency

The central psychological question raised by Palantir's trajectory is whether human consciousness can maintain autonomy under comprehensive algorithmic observation.

Throughout history, humans have developed identity through choice, built meaning through uncertainty, and maintained hope through the belief that we can change and grow.

Algorithmic prediction threatens these fundamental psychological processes. When systems claim to know our future behavior with statistical certainty, they undermine the very uncertainty that makes human agency meaningful.

When Monitoring Becomes Normal

Some researchers argue that we are entering an era of surveillance realism—a psychological state where pervasive monitoring becomes so normalized that people stop experiencing it as problematic.

This represents a form of psychological adaptation where humans adjust their expectations and behaviors to accommodate surveillance rather than resisting it.

Palantir's stock performance and rapid expansion suggest that surveillance capitalism has moved from controversy to mainstream acceptance. When a company's market value exceeds $400 billion based primarily on its ability to surveil and predict human behavior, it signals a fundamental shift in social values.

Palantir's Expansion - From Intelligence to Everywhere

2003: Palantir founded with seed funding from In-Q-Tel (CIA's venture capital arm)

2008: First major government contract with U.S. Army for battlefield intelligence

2014: DHS partnership begins with case management system for immigration enforcement

2020: Direct listing on NYSE; initial market value of $16.5 billion

2023: Launch of Artificial Intelligence Platform (AIP), triggering commercial revenue acceleration

2024: Stock soars 340%, joining S&P 500 and Nasdaq-100; market value exceeds $400 billion

2025: $30 million ImmigrationOS contract with ICE; over $900 million in federal contracts since January

Present: Market capitalization exceeds $430 billion, positioning Palantir as one of the world's most valuable software companies

Conclusion - The Mind Under the Algorithm

Palantir Technologies represents more than a successful software company—it embodies a fundamental transformation in how power operates in the 21st century. As data becomes the primary lens through which institutions see individuals, and as algorithmic analysis shapes an increasing number of decisions that affect human lives, we face profound questions about consciousness, agency, and what it means to be human in a data-governed world.

The psychological implications are far-reaching: chronic anxiety from living under constant surveillance, erosion of autonomous decision-making, fragmentation of digital identity, and normalization of algorithmic judgment. These changes are reshaping human consciousness in ways we're only beginning to understand.

Palantir's meteoric rise—from controversial government contractor to Wall Street darling—signals that surveillance capitalism has moved from fringe concern to mainstream acceptance. When a company's primary value proposition is its ability to surveil, analyze, and predict human behavior, and when that company becomes one of the most valuable in the world, it reveals something essential about the society we're building.

But human psychology is not passive. Throughout history, people have found ways to preserve dignity, maintain agency, and build meaningful communities even under conditions of surveillance and control. The challenge now is to develop new forms of psychological resistance and adaptation that can preserve what is most valuable about human experience.

This requires both individual awareness and collective action. On the individual level, understanding how surveillance systems work and how they affect human psychology can help people make more informed choices. On the collective level, democratic societies must grapple with fundamental questions: What kind of future do we want to create? What role should algorithmic systems play in human governance? Who benefits when human behavior becomes predictable and controllable?

The conversation about surveillance technology and human psychology is just beginning. As systems like Palantir's become more powerful and pervasive, the stakes for human consciousness and social life will only grow higher. The time for engagement is now, while we still have the agency to shape the future rather than simply adapt to it.

The mind in the machine is not just a metaphor—it represents the lived reality of billions of people whose thoughts, feelings, and behaviors are increasingly shaped by systems they cannot see, understand, or control. But humans remain more complex, creative, and resilient than any algorithmic system can capture.

What are your thoughts on the psychological implications of surveillance technology? How do you think we can preserve human agency while engaging with the realities of algorithmic governance? Share your perspectives and join the ongoing conversation about the future of human consciousness in the digital age.

How Smart Tech Is Rewiring Trust, Privacy, and the Human Psyche

Explore how smart TVs, voice assistants, and connected devices are psychologically rewiring our relationship with privacy, trust, and autonomy.

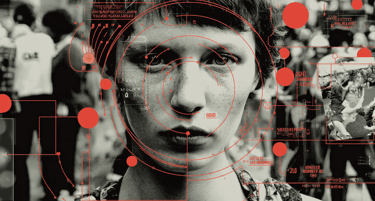

The Cyberpsychology of Facial Recognition in a Post-Privacy World

Explore how facial recognition technology is reshaping human psychology and behavior.

The Psychology of Online Communities - How Digital Tribes Shape Behavior and Belonging

This article examines how digital tribes fulfill our fundamental need for connection in modern society.