The Invisible Hand - How Artificial Intelligence Shapes Human Behavior

AI & US

Introduction

"Hey Siri, what's the weather today?" "Alexa, add milk to my shopping list." "Based on your viewing history, you might enjoy this show..." These familiar interactions mark the quiet integration of artificial intelligence into our daily routines. By 2024, the average person interacts with AI systems more than 100 times daily—often without conscious awareness.

Behind these seemingly innocuous exchanges lies a profound reality: artificial intelligence has become an invisible hand guiding human behavior. From the content we consume to the products we purchase, the opinions we form, and even the emotions we experience, AI systems increasingly shape our decisions, feelings, and thought patterns. This influence extends far beyond mere convenience, potentially altering the very fabric of human psychology and social interaction.

This article explores the multifaceted relationship between AI systems and human behavior—examining how recommendation algorithms, AI companions, and automated decision systems influence our choices, emotional responses, and cognitive processes. As we navigate this evolving landscape, understanding these dynamics becomes essential not only for researchers and technologists but for anyone seeking agency in an algorithmically mediated world.

How AI Influences Decision-Making, Emotions, and Thought Patterns

The Architecture of Influence: AI and Decision-Making

By one widely repeated estimate, an adult makes around 35,000 decisions a day. AI systems increasingly help shape these choices through sophisticated recommendation engines, predictive analytics, and decision-support tools.

The Recommendation Engine Effect

Platforms like Netflix, Amazon, and Spotify use complex algorithms that analyze patterns in user behavior to predict preferences. These systems don't merely respond to our explicit choices; they actively shape future selections through a feedback loop. When Netflix suggests your next binge-worthy series or Spotify curates your "Discover Weekly" playlist, the recommendations narrow the field of possibilities. In doing so they act as what behavioral economists call "choice architecture": the design of the environment in which a choice is presented, which in turn influences what gets chosen.

Some cognitive psychologists argue that recommendation algorithms don't simply predict our preferences; they help create them through repeated exposure and reinforcement. Over time, this blurs the line between discovering what we genuinely want and having those preferences manufactured for us.

Commonly cited figures suggest the scale of this effect: roughly 80% of what people watch on streaming platforms such as Netflix is attributed to recommendations, and about 35% of Amazon's revenue is credited to personalized recommendations. By steadily narrowing what users see, these systems can create what Eli Pariser called a "filter bubble," significantly limiting exposure to diverse options and viewpoints.
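To make the feedback loop concrete, here is a minimal toy simulation, not a model of any real platform's system. It assumes a hypothetical recommender that always suggests whichever category the user has consumed most so far, and a user who accepts that suggestion 80% of the time (an illustrative parameter, not a measured one) and otherwise picks at random. The function name `simulate_feedback_loop` and the category labels are invented for illustration.

```python
import random
from collections import Counter


def simulate_feedback_loop(categories, steps=1000, accept_rate=0.8, seed=0):
    """Toy recommendation feedback loop (illustrative assumptions only).

    Each step, the hypothetical recommender suggests the category the user has
    consumed most so far. With probability `accept_rate` the user takes the
    suggestion; otherwise they pick a category uniformly at random.
    Returns each category's share of total consumption.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    history = Counter()
    for _ in range(steps):
        if history and rng.random() < accept_rate:
            # Recommend the most-consumed category (ties go to the first seen)
            choice = history.most_common(1)[0][0]
        else:
            # Independent exploration, also used for the very first pick
            choice = rng.choice(categories)
        history[choice] += 1
    return {c: history[c] / steps for c in categories}


categories = ["drama", "comedy", "documentary", "news", "sports"]
with_loop = simulate_feedback_loop(categories)
without_loop = simulate_feedback_loop(categories, accept_rate=0.0)
print(max(with_loop.values()), max(without_loop.values()))
```

With the loop switched off (`accept_rate=0.0`), each of the five categories ends up near a 20% share. With it on, one category, fixed largely by the user's first few random picks, absorbs most of the viewing. The concentration comes from the loop itself, not from any preference the simulated user holds.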

Decision Delegation and Automation

Beyond recommendations, AI systems increasingly make decisions on our behalf. From smart thermostats that adjust temperatures based on our habits to email systems that prioritize our inbox, these automated decisions accumulate, creating what researchers term "automation dependency." Studies show that people often defer to algorithmic judgments even when they have reason to distrust them, a tendency known as "automation bias."

This delegation of decision-making authority presents both benefits and risks. While it reduces cognitive load and decision fatigue, it may simultaneously atrophy decision-making skills through disuse. As autonomous vehicles, smart homes, and AI assistants continue expanding their decision domains, the implications for human agency become increasingly significant.

Emotional Algorithms: AI and Affective Experience

Perhaps more surprising than AI's influence on decision-making is its growing impact on human emotional experiences. AI companions and emotionally intelligent systems are designed to detect, respond to, and even shape our emotional states.

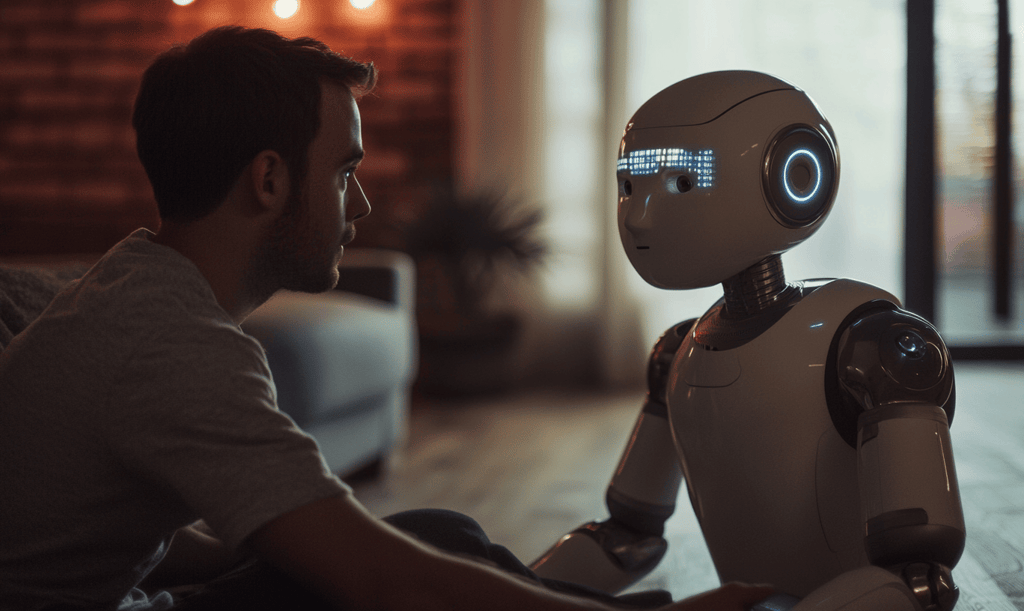

AI Companions and Emotional Attachment

Applications like Replika, Character.AI, and therapeutic chatbots create interactions designed to foster emotional connection. These AI companions employ natural language processing and sentiment analysis to simulate empathy, creating experiences that trigger genuine emotional responses in users.

The psychological impact of these relationships can be profound. A 2023 study by the University of California found that 42% of regular AI companion users reported feeling "meaningful emotional connections" with their digital counterparts. For some users—particularly those experiencing social isolation—these AI relationships provide valuable emotional support. However, they also raise questions about the authenticity of algorithmically mediated emotional experiences.

Digital anthropologists studying these interactions note that although AI companions don't experience emotions, only simulating responses based on patterns in data, the human brain processes these exchanges as genuine social experiences. Our neural architecture evolved to respond to social cues, not to verify their authenticity, so it may not readily distinguish authentic human empathy from a well-designed algorithmic simulation of it.

Mood Management Through Algorithmic Content

Beyond explicit AI companions, content recommendation systems subtly influence emotional states through the media they promote. Social media algorithms learn which content types generate the strongest engagement—often prioritizing material that evokes high-arousal emotions like outrage, anxiety, or amusement.

This algorithmic emotion management extends to music streaming services that offer mood-based playlists and news aggregators that curate content based on emotional engagement metrics. The cumulative effect is a form of emotional steering, where algorithms increasingly influence not just what information we consume, but how we feel about it.
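A minimal sketch of the ranking logic described above, assuming a feed ordered purely by a model's predicted engagement. The `Post` fields, the scores, and the "high"/"low" arousal labels are hypothetical; the scores are chosen to reflect the premise that high-arousal posts tend to attract more engagement. Nothing here reflects any specific platform's ranking code.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical model output, e.g. expected interactions
    arousal: str                 # hypothetical label: "high" or "low"


def rank_by_engagement(posts, k=3):
    """Return the top-k posts ordered purely by predicted engagement."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)[:k]


feed = [
    Post("Calm explainer on the city budget", 0.21, "low"),
    Post("Outrage over a politician's gaffe", 0.74, "high"),
    Post("Gardening tips for spring", 0.18, "low"),
    Post("Alarming health-scare headline", 0.66, "high"),
    Post("Viral prank video", 0.58, "high"),
    Post("Long-form science feature", 0.25, "low"),
]

top = rank_by_engagement(feed)
print([p.title for p in top])
```

No line of this code mentions emotion. The high-arousal posts fill the top three slots only because the objective rewards whatever draws interaction, which is how emotional steering can emerge without anyone designing for it explicitly.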

Cognitive Patterning: AI and Thought Processes

Perhaps the most profound influence of AI systems lies in their potential to shape human thought patterns and cognitive processes. Through prolonged interaction with algorithmic systems, subtle shifts in information processing, attention allocation, and reasoning may emerge.

Echo Chambers and Belief Reinforcement

Recommendation systems that prioritize content similar to previously consumed material create self-reinforcing information loops. These "echo chambers" not only limit exposure to diverse viewpoints but actively strengthen existing beliefs through repeated exposure to confirming information.

This cognitive reinforcement affects not just political polarization (the most commonly discussed case) but also consumer preferences, lifestyle choices, and identity formation. The personalized web experience creates what some writers call "reality tunnels": individualized information environments that can diverge significantly from those of others, even within the same household.
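The self-reinforcing loop can be sketched with a deliberately simplified content-based recommender. It assumes each item carries a single hypothetical "viewpoint" score between -1 and 1 and that the system recommends the items closest to the average of what the user has already consumed; real systems use far richer similarity measures. `recommend_similar` and `simulate_echo_chamber` are illustrative names.

```python
def recommend_similar(history, catalog, k=3):
    """Return the k catalog items whose viewpoint score is closest to the user's average."""
    center = sum(history) / len(history)
    return sorted(catalog, key=lambda score: abs(score - center))[:k]


def simulate_echo_chamber(history, catalog, rounds=5, k=3):
    """Repeatedly recommend similar items and assume the user consumes all of them.

    Returns the set of viewpoint scores the user was ever shown.
    """
    history = list(history)
    shown = set()
    for _ in range(rounds):
        recs = recommend_similar(history, catalog, k)
        shown.update(recs)
        history.extend(recs)  # consumption feeds back into the next round
    return shown


# Hypothetical catalog spanning the full range of viewpoints
catalog = [-1.0, -0.6, -0.2, 0.0, 0.2, 0.5, 0.8, 1.0]
shown = simulate_echo_chamber(history=[0.5, 0.8], catalog=catalog)
print(sorted(shown))
```

Starting from two mildly positive items, the user is shown only 0.5, 0.8, and 1.0 across all five rounds. Nothing at or below zero ever surfaces, even though half the catalog sits there.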

Attention Engineering and Cognitive Processing

AI-driven platforms compete aggressively for user attention, employing sophisticated techniques to maximize engagement. Features like infinite scrolling, variable reward mechanisms, and algorithmically timed notifications exploit cognitive vulnerabilities to capture and retain attention.

The consequences extend beyond mere distraction. Some research suggests that prolonged interaction with certain AI systems may alter information processing styles, favoring rapid, shallow processing over deep, sustained engagement. A 2022 study from University College London found that heavy users of algorithm-driven platforms showed measurable changes in attention allocation patterns even when away from these platforms.

Psychological Risks and Benefits of AI Companions, Chatbots, and Recommendation Algorithms

Benefits: The Positive Potential of AI Influence

Enhanced Efficiency and Personalization

AI assistants and recommendation systems dramatically reduce cognitive load by handling routine tasks and filtering overwhelming information volumes. This efficiency dividend allows users to redirect mental resources toward more meaningful activities and decisions.

The personalization capabilities of modern AI systems also enable tailored experiences that can better align with individual needs, preferences, and learning styles. In educational contexts, adaptive learning systems respond to student performance in real time, adjusting difficulty levels and teaching approaches accordingly.
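As a rough illustration of real-time difficulty adjustment, here is one simple "staircase" rule, a hypothetical policy rather than how any particular adaptive learning product works: move up a level after two consecutive correct answers and down a level after any mistake. The function name, level bounds, and streak length are all assumptions.

```python
def adjust_difficulty(level, correct_streak, answered_correctly,
                      min_level=1, max_level=10, streak_to_advance=2):
    """Apply one step of a simple staircase rule.

    Returns the new (level, correct_streak) pair.
    """
    if answered_correctly:
        correct_streak += 1
        if correct_streak >= streak_to_advance:
            # Enough consecutive successes: raise difficulty and reset the streak
            return min(level + 1, max_level), 0
        return level, correct_streak
    # Any mistake: lower difficulty (not below the floor) and reset the streak
    return max(level - 1, min_level), 0


level, streak = 3, 0
for answer in [True, True, True, True, False, True]:
    level, streak = adjust_difficulty(level, streak, answer)
print(level, streak)
```

Because a single miss lowers the level but two successes are needed to raise it, the rule tends to settle at a level the student gets right most, but not all, of the time.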

Mental Health Support and Accessibility

AI-powered mental health applications have demonstrated promising results in providing support for conditions like depression, anxiety, and PTSD. These tools offer several advantages over traditional therapy:

Accessibility: Available 24/7 without appointment barriers

Affordability: Often more cost-effective than human therapists

Reduced stigma: Some users report feeling more comfortable discussing sensitive issues with AI systems

A 2023 meta-analysis of 28 studies found that AI-assisted cognitive behavioral therapy produced moderate positive outcomes comparable to some traditional therapeutic approaches, particularly for mild to moderate conditions. For those unable to access human therapists due to cost, location, or availability constraints, these tools provide valuable support options.

Cognitive Enhancement and Learning Acceleration

AI systems increasingly function as cognitive augmentation tools, extending human capabilities as partners rather than replacements. Language models assist with writing and ideation, visualization tools help people grasp complex data, and knowledge management systems make information retrieval and synthesis more efficient.

In educational contexts, these technologies enable personalized learning pathways that adapt to individual student needs, potentially addressing longstanding challenges in educational equity. Some studies report that well-designed AI tutoring systems can reduce learning time by up to 30% while improving retention compared with traditional one-size-fits-all approaches.

Risks: The Shadow Side of Algorithmic Influence

Cognitive Dependency and Skill Atrophy

As AI systems increasingly handle cognitive tasks, concerns emerge about potential skill degradation through disuse. Navigation apps may weaken spatial reasoning abilities; calculation tools might reduce numerical fluency; and writing assistants could potentially impact composition skills.

This dependency creates vulnerability: when systems fail or become unavailable, users may struggle with tasks they once performed independently. More concerning is excessive "cognitive offloading," researchers' term for relying on external tools to reduce mental effort, which can leave people less inclined to engage in demanding mental processes when AI alternatives exist.

Manipulation and Exploitative Design

Many AI systems, particularly those with advertising-based business models, employ psychological techniques specifically designed to maximize engagement, often at the expense of user wellbeing. These manipulative practices include:

Variable reward mechanisms that create addiction-like engagement patterns

Loss aversion techniques that generate fear of missing out

Social comparison features that exploit status anxiety

Infinite content designs that eliminate natural stopping cues

These practices often operate beneath conscious awareness while actively exploiting cognitive vulnerabilities. The asymmetry between sophisticated influence systems designed by teams of behavioral scientists and individual users creates an uneven power dynamic that raises serious ethical concerns.
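The first technique in the list, variable rewards, draws on what behavioral psychologists call a variable-ratio reinforcement schedule. The sketch below contrasts it with a fixed-ratio schedule under illustrative assumptions: a "reward" (a like, a new message, an interesting post) arrives on average once every five actions in both cases. The function names and the ratio of 5 are hypothetical.

```python
import random


def fixed_ratio(actions, ratio=5):
    """Reward exactly every `ratio`-th action: fully predictable."""
    return [(i + 1) % ratio == 0 for i in range(actions)]


def variable_ratio(actions, ratio=5, seed=0):
    """Reward each action with probability 1/ratio: same average rate, unpredictable timing."""
    rng = random.Random(seed)
    return [rng.random() < 1 / ratio for _ in range(actions)]


def gaps_between_rewards(rewards):
    """Count how many actions it took to reach each successive reward."""
    gaps, count = [], 0
    for rewarded in rewards:
        count += 1
        if rewarded:
            gaps.append(count)
            count = 0
    return gaps


fixed = fixed_ratio(10_000)
variable = variable_ratio(10_000)
print("reward rate:", sum(fixed) / len(fixed), sum(variable) / len(variable))
print("gap range (fixed):", min(gaps_between_rewards(fixed)), max(gaps_between_rewards(fixed)))
print("gap range (variable):", min(gaps_between_rewards(variable)), max(gaps_between_rewards(variable)))
```

Both schedules pay out at about the same average rate, but only the fixed one is predictable. Under the variable schedule the gap between rewards ranges from a single action to well over twenty, so the next action always might be the one that pays off, the property that in behavioral research produces high and persistent response rates.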

Emotional Dependency and Relationship Displacement

For some users, AI companions fulfill emotional needs while potentially reducing motivation to develop or maintain human relationships. While these systems offer valuable support for those experiencing isolation, they may simultaneously complicate the development of human social skills and connections.

Particularly concerning is the phenomenon of "algorithmic intimacy"—where users develop attachment patterns with AI systems that mimic human relationships without the reciprocity, growth potential, or mutual vulnerability that characterizes authentic human connection. For developing minds, especially children and adolescents, these relationships may create problematic models for understanding and navigating human interactions.

The Ethical Implications of AI's Role in Daily Life

The Autonomy Question: Choice Architecture vs. Manipulation

Central to ethical discussions about AI influence is the matter of human autonomy—the capacity to make independent, self-determined choices. AI systems increasingly operate as "choice architects," shaping the decision environment in ways that nudge users toward particular selections.

When does beneficial personalization cross into manipulation? The boundary often lies in transparency and user awareness. Systems that disclose their influence mechanisms and provide genuine alternatives preserve autonomy, while those operating through hidden influence techniques potentially undermine it.

Philosophers specializing in technology ethics emphasize that meaningful autonomy requires more than just the formal freedom to choose—it demands awareness of the factors shaping those choices. AI systems that function as inscrutable "black boxes" of influence fundamentally compromise user autonomy by concealing their impact on decision-making processes, regardless of whether their intentions are benevolent.

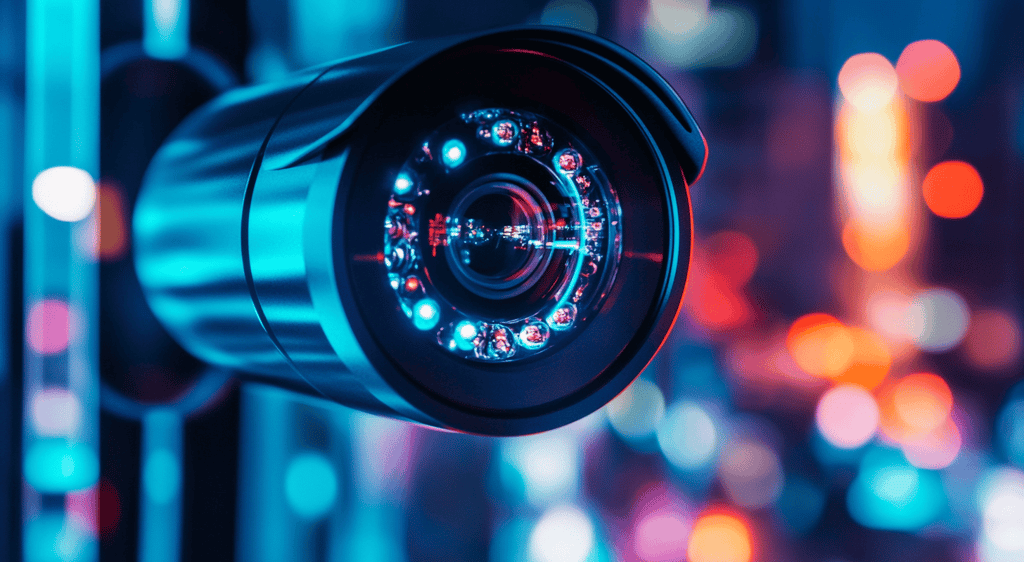

Data Privacy and Surveillance Capitalism

AI influence systems rely on vast datasets detailing personal behaviors, preferences, and even emotional responses. This data collection creates a surveillance infrastructure that potentially threatens privacy and enables increasingly precise behavioral prediction and influence.

The business model underlying many AI systems—what Harvard professor Shoshana Zuboff terms "surveillance capitalism"—treats human experience as raw material for behavioral prediction products. This model creates powerful economic incentives for expanding surveillance and influence capabilities, often prioritizing engagement metrics over user wellbeing.

AI Bias and Algorithmic Inequality

AI systems inherit and potentially amplify biases present in their training data and design choices. Recommendation algorithms may reinforce stereotypes, limit opportunities based on demographic factors, or create disparate impact across different populations.

These biases manifest across various domains:

Employment: AI recruitment tools demonstrating gender and racial biases

Criminal justice: Risk assessment algorithms showing racial disparities

Healthcare: Diagnostic systems performing differently across demographic groups

Financial services: Lending algorithms perpetuating historical discrimination patterns

The risk extends beyond individual discrimination to systemic reinforcement of existing inequalities. When recommendation systems shape educational opportunities, career paths, and information access differently across population groups, they potentially widen existing socioeconomic gaps.

The Human-Machine Boundary

As AI systems become more sophisticated in simulating human-like interaction, the distinction between authentic human connection and algorithmically generated experiences increasingly blurs. This convergence raises profound questions about meaning, authenticity, and the nature of relationships.

Psychologists studying human-AI interactions observe that genuine human connections are founded on mutual understanding, shared vulnerability, and reciprocal growth—qualities that AI systems can simulate but not truly possess. This creates an experiential gap where interactions feel authentic on the surface while lacking the fundamental elements that give human relationships their depth and meaning.

This blurring challenges fundamental assumptions about social and emotional development. If formative relationships increasingly include AI systems, how might this reshape expectations for human interaction? As virtual companions become more sophisticated, what constitutes an authentic relationship may require reconsideration.

Conclusion: Navigating the AI-Influenced World

The relationship between AI systems and human behavior represents one of the most significant psychological frontiers of our time. These technologies offer remarkable benefits—expanded capabilities, personalized experiences, and support previously impossible at scale. Simultaneously, they present novel risks to autonomy, cognition, emotional wellbeing, and social development.

Understanding this influence landscape is essential not only for researchers, ethicists, and technologists, but for anyone navigating the algorithmically mediated world. Digital literacy increasingly requires not just technical knowledge but "influence literacy"—the ability to recognize and respond thoughtfully to algorithmic nudges and personalization effects.

As we move forward, several principles may guide more beneficial human-AI relationships:

Transparent influence: AI systems should disclose their recommendation mechanisms and personalization effects.

Meaningful control: Users deserve accessible options to modify how algorithms curate their experience.

Diversity mechanisms: Systems should include features that periodically introduce diverse viewpoints and unexpected content.

Wellbeing metrics: Success measures should extend beyond engagement to include indicators of user satisfaction and psychological wellbeing.
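The diversity and wellbeing principles can be sketched as a re-ranking step. This is a minimal illustration under stated assumptions, not an established standard: each item carries a hypothetical predicted-engagement score and a hypothetical wellbeing score (for instance, derived from user satisfaction surveys), the two are blended with an adjustable weight, and the last slot is reserved for the best-scoring item from a category the user has not engaged with before. `Item`, `rerank`, and `wellbeing_weight` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    category: str
    engagement: float  # hypothetical predicted engagement, 0 to 1
    wellbeing: float   # hypothetical wellbeing signal, 0 to 1


def rerank(items, seen_categories, k=4, wellbeing_weight=0.5):
    """Blend engagement with wellbeing, then reserve the last of k slots
    for the highest-scoring item from a category the user has not seen."""
    def score(item):
        return (1 - wellbeing_weight) * item.engagement + wellbeing_weight * item.wellbeing

    ranked = sorted(items, key=score, reverse=True)
    if len(ranked) <= k:
        return ranked
    picks = ranked[: k - 1]
    # Diversity slot: first remaining item from an unseen category, if any
    novel = next((it for it in ranked[k - 1:] if it.category not in seen_categories), None)
    picks.append(novel if novel is not None else ranked[k - 1])
    return picks


catalog = [
    Item("Celebrity feud recap", "gossip", 0.90, 0.20),
    Item("Workout routine", "fitness", 0.60, 0.80),
    Item("Another feud update", "gossip", 0.85, 0.05),
    Item("Local volunteering guide", "community", 0.40, 0.90),
    Item("Stock market panic", "finance", 0.80, 0.20),
    Item("Beginner astronomy", "science", 0.30, 0.66),
]
picks = rerank(catalog, seen_categories={"gossip", "fitness", "finance"})
print([it.title for it in picks])
```

Ranked on engagement alone, the top four would be the two feud stories, the market-panic piece, and the workout routine. With the blend and the reserved slot, the feed keeps one high-engagement item but also surfaces the volunteering guide and the astronomy piece, the latter from a category the user has never seen. How to set the weight, and how to measure wellbeing at all, remain open design questions.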

The future relationship between human minds and artificial intelligence remains unwritten. By approaching these systems with both appreciation for their benefits and awareness of their influence mechanisms, we can work toward a future where AI serves as a genuine complement to human flourishing rather than a competitor for human agency.

As you close this article and continue your digital journey, consider: How might the AI systems you regularly engage with be shaping your choices, emotions, and thought patterns? And how might greater awareness of these influences change how you interact with them?

Sidebar: Maintaining Agency in an Algorithmic World

Practical steps for thoughtful engagement with AI systems:

Audit your AI interactions: Periodically review which AI systems influence your daily life and how they might shape your choices.

Diversify information sources: Intentionally seek viewpoints outside your algorithmic recommendations.

Schedule algorithm-free time: Designate periods for unmediated thinking and experiences.

Adjust privacy settings: Review and limit data collection where possible to reduce prediction accuracy.

Practice metacognition: When making decisions, ask yourself "Why am I choosing this? Is this my preference or an algorithmically suggested one?"

Support ethical AI development: Favor products and platforms that prioritize transparency and user wellbeing.