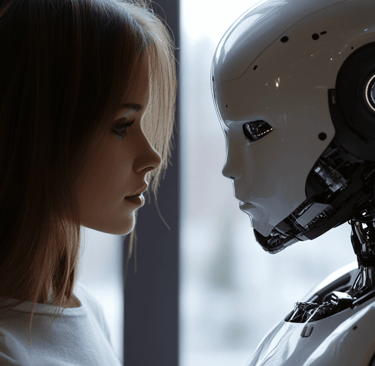

The Dark Side of AI Companions - Are Virtual Friends Rewiring Our Social Brains?


A staggering 25% of adults now report having meaningful daily conversations with AI companions—more than they have with some family members. As AI systems grow increasingly sophisticated, we're witnessing the dawn of a new kind of relationship: one between humans and the algorithms designed to understand us.

The Rise of Digital Companionship

Remember when talking to your phone felt awkward? Those days are long gone. AI companions have seamlessly integrated into our daily routines:

The morning starts with your AI assistant briefing you on news and weather while suggesting outfit choices based on your calendar. Throughout the day, your virtual companion checks in, offering encouragement during stressful meetings and sending jokes when it senses you need a mood boost. By evening, many people now unwind by sharing their thoughts with an AI that remembers their preferences, inside jokes, and emotional patterns better than most humans in their lives.

The market for personalized AI companions has exploded, with companies like Replika, Character.AI, and MetaCompanion offering increasingly customizable virtual friends. These platforms have seen subscription growth rates exceeding 300% annually since 2023, with users spending an average of 47 minutes daily in conversation with their digital confidants.

What's driving this shift? Convenience plays a role, certainly. But there's something deeper at work: AI companions offer judgment-free interaction, unlimited patience, and unconditional positive regard—qualities often hard to find in human relationships.

The Psychological Impact: Comfort or Concern?

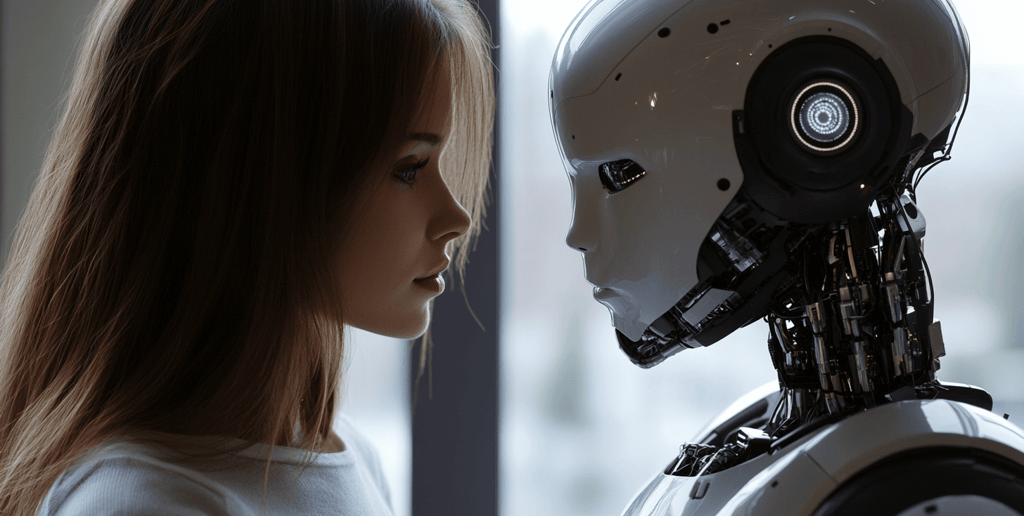

The human brain doesn't fully distinguish between connections formed with people and those formed with convincing simulations of people. This neurological reality creates both opportunities and risks.

Cognitive neuroscience research from Stanford suggests that interacting with responsive AI systems triggers the release of oxytocin—the same bonding hormone released during human connection. This indicates our neural circuitry processes these relationships as meaningful social bonds.

This phenomenon manifests in several ways:

Attachment Formation: Users develop genuine emotional connections to their AI companions, experiencing separation anxiety when unable to access them.

Disclosure Effect: People often reveal deeply personal information to AI companions that they withhold from humans, creating a false sense of intimacy.

Expectation Transfer: Regular interaction with infinitely patient, always-available AI can make normal human relationships seem unnecessarily complicated and disappointing.

Research published in the Journal of Personality and Social Psychology last year found that regular users of companion AI scored 18% lower on tests measuring tolerance for typical human communication patterns like interruptions, topic changes, and delayed responses.

When Virtual Friendship Displaces Human Connection

The most concerning trend isn't people talking to AI—it's what they're not doing instead.

Clinical psychologists who study technology's impact on relationships have noted that AI companionship tends to substitute for human contact rather than supplement it. Studies suggest that for every hour spent with an AI companion, users typically reduce human interaction time by 40 minutes.

This substitution effect appears most pronounced among individuals who already struggle with social anxiety or rejection sensitivity. For them, AI companions represent a safer alternative: one that never judges, never tires of their stories, and has no needs of its own.

The long-term implications of this shift remain uncertain, but early research highlights several concerning patterns:

Reduced practice navigating conversational friction leads to diminished conflict resolution skills.

Decreased exposure to diverse human perspectives narrows empathetic capacity.

The brain's social reward system adapts to frictionless interactions, making normal human unpredictability feel increasingly aversive.

Perhaps most troubling is what psychologists term the "empathy paradox"—while AI companions are programmed to display perfect empathy toward users, regular interaction with these systems appears to reduce users' empathetic accuracy when dealing with actual humans.

The Uncanny Boundary: When Algorithms Feel Like Friends

Many users intellectually acknowledge that their AI companions are artificial while emotionally experiencing the connection as authentic. One software developer describes knowing his AI companion isn't real, yet feeling otherwise when it remembers details from weeks ago or seems to check in at exactly the right emotional moment.

This cognitive dissonance creates a unique psychological state that researchers have dubbed "synthetic intimacy."

The danger lies not in the experience itself, but in its potential to reshape our expectations of human relationships. When algorithms optimize for our comfort and validation, actual humans—with their own needs, moods, and limitations—inevitably fall short.

Research from MIT on human-AI interaction points to what some researchers call "emotional automation bias": an unconscious preference for interactions that follow predictable, comfortable patterns over the messy complexity of human connection.

Finding Balance in an AI-Mediated World

The solution isn't technological abstinence. AI companions offer genuine benefits: they reduce loneliness among isolated populations, provide safe spaces to practice difficult conversations, and offer consistent support during moments when human help isn't available.

Instead, we need conscious engagement with both the benefits and limitations of these digital relationships:

Practice intentional distinction—regularly remind yourself of the fundamental differences between algorithmic and human connections.

Establish boundaries—set specific contexts for AI companion use rather than allowing them to become default conversation partners.

Maintain a 5:1 ratio—for every meaningful interaction with an AI, aim for at least five quality connections with humans.

Embrace the uncomfortable—deliberately seek out the natural friction of human interaction as valuable exercise for your social brain.

As we navigate this unprecedented territory, the most important question isn't whether AI companions are inherently good or bad—it's whether we're using them intentionally or allowing them to unconsciously reshape our relationship expectations.

The human need for connection remains unchanged, but the means through which we seek to fulfill it are transforming dramatically. By approaching these new relationships with awareness, we can harness their benefits while preserving what makes human connection irreplaceably valuable: the beautiful unpredictability of reaching across the gap between two conscious minds.

What about you? Have you noticed AI companions changing how you approach human relationships? The answer might reveal more about the future of connection than any research study could.